Funny how everything eventually comes full circle. A mere thirty-some years ago, the state of the art was client-server computing where one or more client nodes were directly connected to a central server.

As much as enterprise companies embraced client-server, it had limitations: data integrity risks, limited scalability, and, more importantly, network traffic processing. Under heavy traffic, the server could be overwhelmed and suffer what amounted to a “denial of service.”

Then, distributed architecture helped solve these limitations. Interconnected PCs shared the workload, computing power, and storage. The result: reduced security risks, reduced network traffic, and a completely scalable architecture.

Today, digital transformation across the enterprise and the pervasive use of connected devices among consumers have created even greater issues of scalability, data and security risks, and massive network traffic. The solution across every enterprise was to move everything to the cloud.

Enter Edge Computing

It’s easy to relate cloud computing to client-server architecture. Consider edge computing as an extension of distributed architecture where you are taking the ‘computation’ closer to the source of data generation, at the edge.
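As a rough illustration of moving computation closer to the data source, the sketch below summarizes a batch of raw sensor readings on the edge node, so that only a compact summary would need to travel to the cloud. The function name `summarize_at_edge`, the temperature-style readings, and the alert threshold are all hypothetical, chosen only for this example.

```python
from statistics import mean


def summarize_at_edge(readings, threshold):
    """Reduce a batch of raw readings to a compact summary on the edge node.

    Hypothetical helper: the field names and threshold rule are assumptions
    made for illustration, not part of any product API.
    """
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        # Only out-of-range values are forwarded individually.
        "alerts": [r for r in readings if r > threshold],
    }


# Five raw readings collected locally; one is above the alert threshold.
raw = [21.0, 21.5, 22.0, 35.0, 21.5]
summary = summarize_at_edge(raw, threshold=30.0)
print(summary)
```

In a real deployment the batch could hold thousands of readings, while the summary stays the same size apart from any alerts.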

Why is this important now?

We live in a “smart,” connected world: phones, HVAC, cars, smart speakers, game consoles, factories, even cities. All these things are adding to the volumes of data we generate and consume on the internet.

(Fun fact: The number of devices connected to the Internet of Things (IoT) surpassed the number of smartphones and personal computers some time ago and will overtake the number of people on the planet this year. Statista estimates that over 75 billion devices will connect to IoT by 2025: https://www.statista.com/statistics/471264/iot-number-of-connected-devices-worldwide/)

Cloud computing is fine if data and computation are in the same location with limitless flexibility for accessing, storing, and computing data. However, the amount of data and compute speed have become so crucial that any delay is unacceptable. In fact, latencies cost Amazon and Google (and their customers) millions. Consider that:

“Amazon found every 100ms of latency cost them 1% in sales. Google found an extra .5 seconds in search page generation time dropped traffic by 20%. A broker could lose $4 million in revenues per millisecond if their electronic trading platform is 5 milliseconds behind the competition.” (Source: https://www.presstitan.com/100ms-latency/)

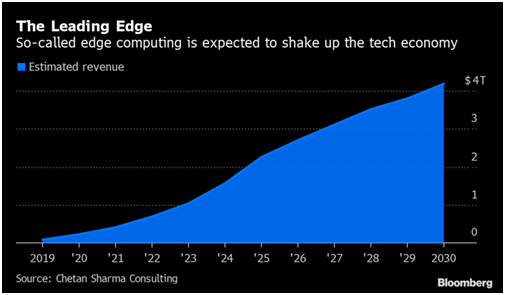

Not surprisingly, many pundits predict that telecom will most likely be the first major beneficiary of edge computing. Telecom companies are building 5G networks, and they control access to the high-speed networks whose cell sites and towers carry enormous volumes of data. Being able to collect and analyze this data at the source in real time creates enormous advantages for wireless carriers. The opportunity for this sector and others, such as shipping lines, is so lucrative that telecom analyst Chetan Sharma predicted in a recent Bloomberg Businessweek article that edge computing could be a $4 trillion market by 2030.

RTView, with its distributed architecture for collecting, managing, and visualizing data at the edge, is an ideal solution for telecom, IoT, and other use cases where analyzing data at the edge is critical. To store data at the edge as well, we have partnered with Machbase, maker of a time-series database. Machbase has developed a lightning-fast time-series database that combines in-memory technology for maximizing data entry, columnar DBMS technology for optimizing analysis, and search engine technology for real-time search.

To learn about IoT and messaging systems monitoring, go to: https://rtview.com/products/rtview-cloud-for-iot/

For a free 30-day trial of RTView Cloud, please visit: http://rtviewcloud.rtview.com/register

To learn more about Machbase, please visit: https://www.machbase.com/machbase